Actions ~ Transformations

|

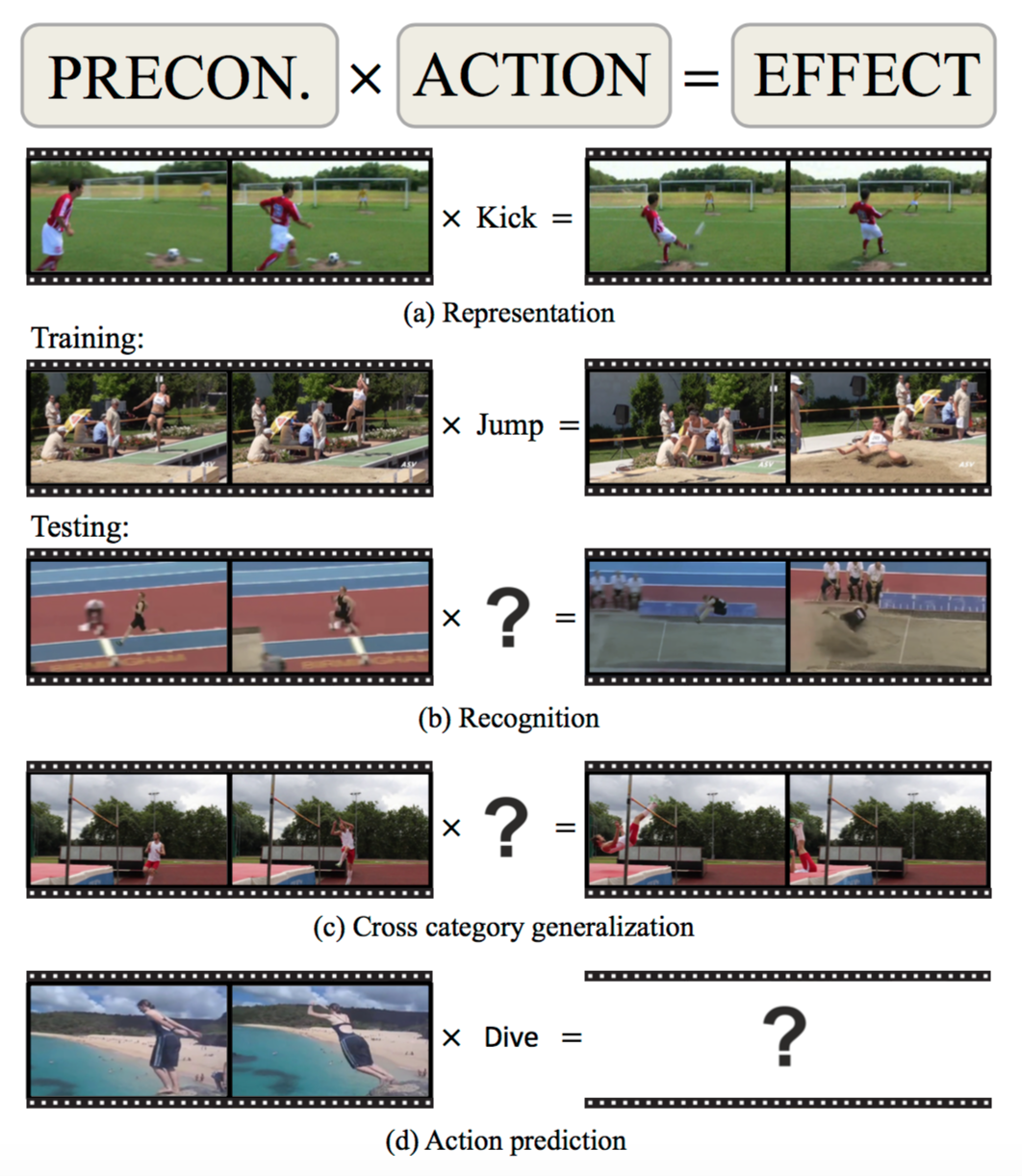

| What defines an action like "kicking ball"? We argue that the true meaning of an action lies in the change or transformation an action brings to the environment. In this paper, we propose a novel representation for actions by modeling an action as a transformation which changes the state of the environment before the action happens (precondition) to the state after the action (effect). Motivated by recent advancements of video representation using deep learning, we design a Siamese network which models the action as a transformation on a high-level feature space. We show that our model gives improvements on standard action recognition datasets including UCF101 and HMDB51. More importantly, our approach is able to generalize beyond learned action categories and shows significant performance improvement on cross-category generalization on our new ACT dataset. |

People

Paper

|

Xiaolong Wang, Ali Farhadi and Abhinav Gupta Actions ~ Transformations Proc. of IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 2016 [PDF] |

Citation

@inproceedings{Wang_Transformation,

Author = {Xiaolong Wang and Ali Farhadi and Abhinav Gupta},

Title = {Actions {\textasciitilde} Transformations},

Booktitle = {CVPR},

Year = {2016},

}

ACT Dataset

|

|

Our ACT dataset consists of 11234 high quality video clips with 43 classes. These 43 classes can be further grouped into 16 super-classes. For example, we have classes such as kicking bag and kicking people, they all belong to the super-class kicking; swinging baseball, swinging golf and swinging tennis can be grouped into swinging. We propose two tasks for our ACT dataset: The ACT dataset can also be downloaded from dropbox. [Video Frames (jpg in 34GB)] [Videos (avi in 14GB)] [Labels] |

Acknowledgements

This work was partially supported by ONR N000141310720, NSF IIS-1338054, ONR MURI N000141010934 and ONR MURI N000141612007. This work was also supported by Allen Distinguished Investigator Award and gift from Google. The authors would like to thank Yahoo! and Nvidia for the compute cluster and GPU donations respectively.The authors would also like to thank Datatang for labeling the ACT dataset.